Efficient, abusive, or both? Ways to evaluate unilateral conduct

Efficiency arguments play a role in abuse of dominance investigations, but often only implicitly and at the end of the process. Established quantitative techniques can help to make efficiency analysis more integral in abuse cases. Such techniques are often considered by regulators when concluding regulatory settlements. Can competition authorities use them too?

Investigations into abuse of dominance or monopolisation (often referred to as unilateral conduct cases) typically focus on the market position of the company under investigation—i.e. whether it has a dominant position or monopoly power—and then on the effects of the conduct on competition and consumers. If significant anticompetitive effects are found, the conduct is considered abusive.

This article builds on a presentation by Oxera to the Unilateral Conduct Working Group of the International Competition Network on 27 October 2016.

In many jurisdictions, the accused company then still has the option to demonstrate that its conduct had an objective justification and offsetting benefits.1 Efficiency arguments could be part of this justification. But at that stage of the process, the burden of proof to show that the efficiencies outweigh the anticompetitive effects tends to be rather high. According to one study, in the few recent abuse of dominance cases where the European Commission has considered explicit efficiency justifications in a transparent manner, they have been rejected.2

That is not to say that efficiency considerations play no role in competition authorities’ thinking. Authorities will often take efficiency into account when selecting which types of conduct to investigate in the first place. Certain practices are rarely investigated precisely because they have efficiency benefits (for example, certain forms of price discrimination and bundling). A commonly used test for assessing unilateral conduct by dominant companies is whether the conduct is likely to harm as-efficient competitors; this test does, by definition, involve efficiency considerations.

However, there may be advantages to bringing efficiency arguments more to the fore in abuse of dominance investigations, rather than considering them at the end as part of objective justifications. Canada is one of the few jurisdictions (if not the only one) where this is done (see the box below), which opens the way for a more quantitative assessment of efficiencies.

Efficiency in unilateral conduct cases: the Canadian framework

In Canada, a three-part cumulative test is applied.1 It asks:

- Does the firm have a dominant position?

- Is the dominant firm engaging in an anticompetitive practice—i.e. abusing its dominant position?

- Does the anticompetitive practice by the dominant firm have, or is it likely to have, the effect of prevention or substantial lessening of competition?

In this system, efficiencies are considered in step 2. If the dominant firm engages in conduct with a clear efficiency rationale, the conduct may not be considered anticompetitive.2 In this case, there is no need for the assessment of effects on competition in step 3. However, once the conduct is deemed anticompetitive, after any expected efficiencies are taken into account in step 2, such efficiencies cannot be used as a defence in the consideration of effects.

Note: 1 See Competition Act, subsection 79(1). 2 The notion of necessity comes into play here: if the efficiency rationale could have been fulfilled with less restrictive conduct, the conduct may be difficult to defend.

Where efficiencies come in

From an economic perspective, efficiency considerations lie at the heart of competition analysis of unilateral conduct, and indeed competition policy more generally. In simple terms, competition is assumed to increase welfare because it allows efficient firms to grow, and ultimately drives inefficient ones out of the market. In practice, the recognition of efficiency in unilateral conduct cases has remained more theoretical than applied.

At a high level, two types of efficiencies can be distinguished:

- efficiencies in consumption, accruing directly to the customers of the firm engaging in unilateral conduct (also known as demand-side efficiencies);

- efficiencies in production, accruing to the firm in the form of cost savings, and passed on to its customers to a greater or lesser extent through lower prices or higher quality.

An example of the former might be changes in product offering that are valued by consumers, while the latter might include cost savings that can lead to a sustained reduction in prices.3

Demand- and supply-side efficiencies are considered in turn below.

Efficiencies that benefit customers directly

The evaluation of demand-side efficiencies, or efficiencies in consumption, involves a context-specific quantification of customer benefits. Oxera has undertaken such an analysis for Flybe, a regional airline, in the context of a predation allegation, which was investigated in 2011 by the UK Office of Fair Trading (OFT, now merged into the Competition and Markets Authority).4 The analysis is summarised in the box below. At its core was a comparison of the situations with and without the conduct, focusing on the aspects that mattered to consumers. By assigning valuations to these aspects, the analysis quantified the impact of the conduct, for example in terms of the difference between direct and indirect flights on a given route and consumers’ valuation of the difference in travel time.

Efficiency arguments in the Flybe predation case (2011)

Flybe, a regional airline that had entered the Newquay–London Gatwick route, was accused of predatory pricing by the incumbent regional airline, Air South West (ASW). Flybe ran an efficiency defence, arguing that ASW’s costs should not be used as a benchmark because it operated suboptimal aircraft. It also argued that there was untapped demand due to ASW’s lack of a hub and absence of marketing in London. ASW’s flight was an indirect one via Plymouth, and was therefore less attractive to passengers. Flybe quantified the consumer benefits of its entry at £4.48m, realised in the form of lower fares, increased frequency, the addition of direct flights to the offering, and sales via its global distribution systems.

These efficiency arguments and quantification could have formed an integral part of the assessment of the competitive effects of Flybe’s conduct. However, the OFT did not consider these points in detail in its effects analysis, in part because it was concerned that these benefits to consumers could arise from the predation itself, and therefore might not be sustained. Instead, the OFT applied an as-efficient competitor test, focusing on the costs and revenues of Flybe. It noted that having revenues below average avoidable costs (AAC) for a period after entry on a new route was inherent to the economics of the airline market. It did not find predation, and closed the investigation.
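The with-and-without comparison described above can be illustrated with a minimal sketch. All figures below are hypothetical and are not the Flybe numbers; the `RouteScenario` class, the `consumer_benefit` function and the value-of-time parameter are illustrative assumptions. Benefits to newly generated passengers are approximated with the 'rule of a half' commonly used in transport appraisal, which is also an assumption rather than the method used in the case.

```python
from dataclasses import dataclass


@dataclass
class RouteScenario:
    """One side of the with/without comparison (hypothetical figures)."""
    passengers: int        # annual passengers on the route
    avg_fare: float        # average one-way fare, GBP
    journey_hours: float   # travel time per trip, hours


def consumer_benefit(without: RouteScenario,
                     with_conduct: RouteScenario,
                     value_of_time: float) -> float:
    """Annual consumer benefit (GBP) of the scenario with the conduct.

    Gain per trip = fare saving + time saving valued at `value_of_time`.
    Existing passengers receive the full gain; newly generated passengers
    are assumed to receive half of it (rule of a half).
    """
    fare_saving = without.avg_fare - with_conduct.avg_fare
    time_saving = without.journey_hours - with_conduct.journey_hours
    gain_per_trip = fare_saving + time_saving * value_of_time

    existing = without.passengers
    generated = max(with_conduct.passengers - without.passengers, 0)
    return gain_per_trip * existing + 0.5 * gain_per_trip * generated


# Hypothetical route: indirect service only vs. direct service added.
without = RouteScenario(passengers=10_000, avg_fare=80.0, journey_hours=2.5)
with_entry = RouteScenario(passengers=14_000, avg_fare=65.0, journey_hours=1.5)

benefit = consumer_benefit(without, with_entry, value_of_time=20.0)
print(f"Estimated annual consumer benefit: GBP {benefit:,.0f}")
```

A fuller analysis would also value frequency and distribution-channel improvements, and would test whether the lower fares are sustainable, which was the OFT's concern.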

Production efficiencies

Supply-side efficiencies can be explained through the following example: in a margin squeeze allegation, the as-efficient competitor test examines the margins of the entity accused of the anticompetitive conduct.5 If the margin between retail and wholesale price covers the costs of the alleged abuser, the test rejects the allegation. This is because the dominant firm’s wholesale pricing would allow an equally efficient competitor operating only at the retail level to make a positive profit. If, on the other hand, the dominant firm’s pricing was not covering its own retail costs, then an equally efficient rival would make a loss and would eventually be forced out of the market, leading to a reduction in average efficiency.
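The arithmetic of the as-efficient competitor test in a margin squeeze setting can be sketched as follows. This is a simplified illustration with hypothetical per-unit prices and costs; the function and field names are assumptions introduced here, and an actual test would involve choices about cost standards, time periods and product aggregation that are not modelled.

```python
from dataclasses import dataclass


@dataclass
class SqueezeResult:
    margin: float     # retail price minus wholesale price
    headroom: float   # margin minus the dominant firm's own retail cost
    squeeze: bool     # True if the margin does not cover that cost


def as_efficient_margin_test(retail_price: float,
                             wholesale_price: float,
                             own_retail_cost: float) -> SqueezeResult:
    """Compare the retail-wholesale margin with the dominant firm's own
    per-unit retail cost (the as-efficient benchmark)."""
    margin = retail_price - wholesale_price
    headroom = margin - own_retail_cost
    return SqueezeResult(margin, headroom, squeeze=headroom < 0)


# Hypothetical case 1: margin of 10 covers retail costs of 8.
ok = as_efficient_margin_test(retail_price=30.0, wholesale_price=20.0,
                              own_retail_cost=8.0)

# Hypothetical case 2: a higher wholesale price leaves a margin of 6.
tight = as_efficient_margin_test(retail_price=30.0, wholesale_price=24.0,
                                 own_retail_cost=8.0)

print(ok)
print(tight)
```

In the first case an equally efficient retail-only rival could earn a positive profit, so the test rejects the allegation; in the second it could not.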

In network industries, characterised by significant scale economies, a large incumbent may be able to cover its costs comfortably, while a small entrant may require a period of time to reach a comparable productive scale. The as-efficient competitor test uses the costs of the large incumbent as an efficiency benchmark, disadvantaging the smaller entrant by construction. On this static view, pushing the entrant out of the market would increase overall efficiency. However, a static approach to efficiency may not be optimal in the long run, since the entrant could become comparably efficient once it reaches sufficient scale.

The idea that it takes time for an entrant to reach productive scale size is behind the ‘reasonably efficient competitor test’ (a more descriptive term would be ‘not-yet-as-efficient competitor test’). In applying such a test, the regulator or competition authority protects a new competitor to allow it to grow and become comparably efficient. The aim is to bring about effective competition in future. One problem with this test is that an entrant may be inefficient in ways that are not due to small scale alone. If the entrant has some technical inefficiency (for example, due to poor managerial conduct or decision-making, which could also apply to the incumbent), it is arguably not reasonably efficient. Assessment of the type of inefficiency is therefore central to the application of the reasonably efficient competitor test.

The assessment of reasonably efficient costs is complicated by an information asymmetry between the authority and the companies in the industry. While the as-efficient competitor test uses the costs of the dominant company as a benchmark, the reasonably efficient competitor test involves an element of judgement. How should the authority adjust the actual costs of the entrant to offset the disadvantage it suffers because of its small scale, while avoiding the protection of technically inefficient competitors?

This is an area that has received little attention in the assessment of unilateral conduct, but relevant techniques already exist in other areas. For example, quantitative techniques are routinely applied in efficiency benchmarking exercises undertaken in the regulation of monopolies, or in the application of the so-called fourth Altmark criterion in state aid investigations.6 The same techniques could be applied where competition enforcement involves asking questions about efficiency (typically about costs, but they can also involve other notions of efficiency).7

Where quantitative techniques come in

Competition policy has embraced the use of sophisticated quantitative tools in many areas. While the measurement of cost efficiency in abuse cases is currently not one of these, there is no reason why this should not change. Efficiency arguments are already frequently assessed in merger control.8 Outside of competition policy, regulators commonly use sophisticated efficiency techniques.

In the regulatory context, the inherent information asymmetry between the firm and the regulator is mitigated by using data from multiple companies wherever possible.9 Typically, regulators aim to use a wide evidence base, and information from comparative efficiency analysis is cross-checked with company evidence on efficiencies to obtain a consensus view, while ensuring that the results are not overly sensitive to the assumptions adopted for the assessment.

A high-level distinction can be made between techniques based on econometric methods (regression analysis, and its extension in the form of stochastic frontier analysis, SFA),10 and techniques based on mathematical optimisation (data envelopment analysis, DEA).11 Both are widely accepted by regulatory authorities around the world, and are also used in combination (for example, in Germany and Austria). They are designed to enable like-for-like comparisons that account for external factors that could affect an organisation’s efficiency. Software packages are available for applying both techniques.

The example of DEA is discussed in the box below, together with its application in assessing efficiencies. Similar points could be made for SFA.

Using data envelopment analysis to benchmark costs

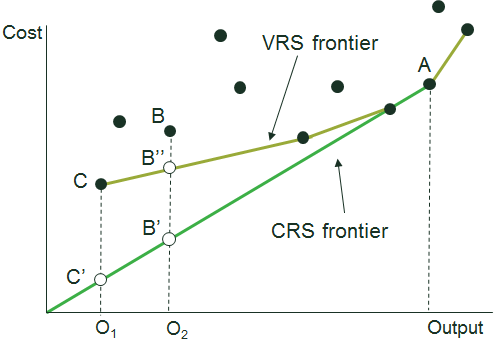

In DEA, unlike in regression analysis or SFA, no relationship needs to be assumed ex ante between the resources in question (e.g. cost) and the outcomes achieved. Data requirements for DEA are also less onerous. For each company, it is possible to point to observed peers that operate more efficiently and follow best practice (if the particular company is deemed inefficient). In the example in the figure below, company A operates on a larger scale than company B or company C. Suppose the authority wishes to determine the unit costs of an equally efficient and a reasonably efficient operator, both relative to A. In this example, B and C are not ‘equally efficient operators’ relative to A, as the output-to-cost ratio is highest at A.

Specifically, at the scale size of B:

- B’ (a hypothetical company) has the costs of an equally efficient competitor relative to A (i.e. the output-to-cost ratios at B’ and A are equal);

- B’’ (a hypothetical company) has the costs of a reasonably efficient competitor relative to A (i.e. the output-to-cost ratio at B’’ is optimal given its scale).

Figure 1 Undertaking the as-efficient and reasonably efficient competitor tests using DEA

Source: Oxera.
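The two benchmarks in this box can be illustrated with a minimal single-input, single-output sketch. The (output, cost) observations below are hypothetical and are not read from the figure; the function names are assumptions introduced here. The 'reasonably efficient' cost is computed as the lowest cost on a variable-returns-to-scale frontier formed by convex combinations of observed firms, which is one common DEA formulation. With one input and one output this can be found by checking single firms and pairs of firms, so no optimisation library is needed; a real application with several cost drivers would use a linear programming solver.

```python
from itertools import combinations

# Hypothetical observations: firm -> (output, cost). A is the large incumbent.
FIRMS = {"A": (100.0, 100.0), "B": (40.0, 60.0), "C": (20.0, 30.0)}


def as_efficient_cost(output, benchmark):
    """Cost at `output` if the firm matched the benchmark firm's
    output-to-cost ratio (point B' in the box above)."""
    bench_output, bench_cost = benchmark
    return output * bench_cost / bench_output


def reasonably_efficient_cost(output, firms):
    """Lowest cost of producing at least `output` using convex
    combinations of observed firms (point B'' in the box above)."""
    points = list(firms.values())
    # Any single observed firm producing at least `output` is feasible.
    candidates = [cost for out, cost in points if out >= output]
    # Otherwise, interpolate between two firms whose outputs bracket it.
    for p, q in combinations(points, 2):
        lo, hi = sorted([p, q])
        if lo[0] < output <= hi[0]:
            w = (output - lo[0]) / (hi[0] - lo[0])
            candidates.append((1 - w) * lo[1] + w * hi[1])
    if not candidates:
        raise ValueError("output exceeds that of every observed firm")
    return min(candidates)


output_b, cost_b = FIRMS["B"]
cost_b_prime = as_efficient_cost(output_b, FIRMS["A"])
cost_b_double_prime = reasonably_efficient_cost(output_b, FIRMS)

print(f"B actual cost:                 {cost_b:.1f}")
print(f"B'  (as-efficient cost):       {cost_b_prime:.1f}")
print(f"B'' (reasonably efficient):    {cost_b_double_prime:.1f}")
```

In this hypothetical data set, B's actual cost lies above both benchmarks, and the reasonably efficient benchmark lies above the as-efficient one because it allows for B's smaller scale.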

The two boxes below provide examples of where SFA and DEA have been applied to assess efficient costs, given operator characteristics such as scale size and other extenuating circumstances—i.e. what in a margin squeeze context might be classified under ‘reasonably efficient costs’.

Cost-efficiency assessment of Royal Mail’s delivery offices for the 2006–10 price control period

Oxera estimated efficient costs for Royal Mail’s delivery offices using internal benchmarking and ordinary least squares regression, and SFA and DEA techniques. This made it possible to compare a given delivery office with others that were similar in terms of mix of outputs, scale size and operator characteristics. Once output volumes and other exogenous features were controlled for, the remaining differences in labour costs could be attributed to inefficiency. Returns to scale in delivery offices were found to be variable. This implied a higher overall efficiency than was found by the regulator using a constant returns to scale assumption.

Source: Horncastle, A., Jevons, D., Dudley, P. and Thanassoulis, E. (2006), ‘Efficiency Analysis of Delivery Offices in the Postal Sector Using Stochastic Frontier and Data Envelopment Analyses’, chapter 10, pp. 149–64, in A. Crew and P.R. Kleindorfer (eds), Liberalization of the Postal and Delivery Sector, Edward Elgar.

‘Well-run undertaking’ assessment when evaluating the fourth Altmark criterion for a transport operator in a state aid case, 2016

In the context of a contract for compensation granted for a public service obligation in the transport industry, Oxera applied the ‘well-run undertaking’ test. A review of communications and decisions by the European Commission and the European Free Trade Association Surveillance Authority indicated that a variety of approaches, including examining key performance indicators and regressions, were considered suitable for assessing whether the fourth Altmark criterion had been met. Based on this, Oxera undertook a regression analysis of comparable public service obligation contracts. Efficient costs were then predicted from the estimated regression model, given the specific characteristics of the contract under consideration. Oxera concluded that the projected costs for the contract under consideration reflected those of a well-run undertaking.

Source: Oxera.
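The regression-based approach described in this box, predicting benchmark costs for a contract from comparable contracts, can be sketched with a one-variable ordinary least squares fit. The data, the single cost driver and the function names are hypothetical assumptions for illustration; the actual analysis would involve several contract characteristics and specification testing. Note that an OLS prediction reflects average rather than frontier performance among the comparators.

```python
from statistics import mean


def fit_ols(x, y):
    """Return (intercept, slope) of a simple least-squares line y = a + b*x."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


# Hypothetical comparator contracts: service volume and annual cost.
volume = [1.0, 2.0, 3.0, 4.0, 5.0]       # e.g. million vehicle-km
cost = [12.0, 19.0, 31.0, 38.0, 50.0]    # e.g. GBP million

intercept, slope = fit_ols(volume, cost)

# Contract under review (hypothetical): its volume and projected cost.
contract_volume = 3.5
projected_cost = 34.0
benchmark_cost = intercept + slope * contract_volume

print(f"Benchmark cost at volume {contract_volume}: {benchmark_cost:.2f}")
print("Projected cost within benchmark:", projected_cost <= benchmark_cost)
```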

Data requirements

Before considering the application of comparative efficiency techniques in unilateral conduct investigations, a competition authority should ask at least the following questions.

- Is the necessary data available for producing quantitative evidence on cost efficiency?

- Is detailed information required on the production processes relevant to the industry in question?

- What, specifically, does the application of quantitative efficiency techniques add over and above what can be achieved through a qualitative assessment of scale disadvantage?

The concept of efficiency considered here is comparative, and the use of only one data point (in terms of benchmark performance) may therefore not suffice. A variety of sample sizes have been used in regulatory practice. For example, the German networks regulator, the Bundesnetzagentur, models the comparative cost efficiency of electricity distribution for just under 200 system operators12 using SFA and DEA separately, and selects the result that is most favourable to a distribution operator.13 It also models the comparative efficiency of gas transmission service operators, of which there are only 13.14 The sample size has to be taken into account in the specification of the model, but rarely precludes the use of comparative techniques. Regulators across the world obtain productivity information on competitive sectors in the economy from publicly available data sources such as EU KLEMS and the OECD to determine a benchmark performance or expectation for the regulated entity in question.15

Comparative efficiency techniques allow us to create a benchmark for a given set of operator characteristics, and therefore (for example) to derive a concrete measure of reasonably efficient costs. The parallel might be the prediction of hypothetical infringement-free prices in cartel overcharge estimation, or the prediction of the counterfactual prices in a more concentrated market when applying price concentration analysis in merger investigations.

The problem with qualitative ad hoc adjustments for scale (and the argument can be extended to other unavoidable factors) is that they can lead to a confusion between scale inefficiency and other types of inefficiency. The competition authority will wish to hold the entrant responsible for pure managerial inefficiency, while giving it time to reach the most productive scale, or at least a scale that is comparable to that of the incumbent (as shown in the figure in the box above, where company A is the incumbent). A key strength of quantitative comparative efficiency techniques is that they can disentangle different components of inefficiency, and the economic framework is well established in the academic literature.
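As a rough illustration of how the components can be separated, the sketch below splits an entrant's overall cost gap into a managerial part and a scale part. It takes three hypothetical per-period cost figures as given: the entrant's actual cost, the reasonably efficient cost at its current scale, and the as-efficient cost implied by the incumbent's output-to-cost ratio. The names and numbers are assumptions; the multiplicative split mirrors the decomposition of overall efficiency into technical and scale efficiency commonly used in DEA.

```python
from dataclasses import dataclass


@dataclass
class Decomposition:
    overall: float      # as-efficient cost / actual cost
    managerial: float   # reasonably efficient cost / actual cost
    scale: float        # as-efficient cost / reasonably efficient cost


def decompose(actual_cost: float,
              reasonably_efficient_cost: float,
              as_efficient_cost: float) -> Decomposition:
    """Split overall efficiency into managerial and scale components,
    so that overall = managerial * scale."""
    return Decomposition(
        overall=as_efficient_cost / actual_cost,
        managerial=reasonably_efficient_cost / actual_cost,
        scale=as_efficient_cost / reasonably_efficient_cost,
    )


# Hypothetical entrant: actual cost 60, benchmarks 47.5 and 40.
d = decompose(actual_cost=60.0,
              reasonably_efficient_cost=47.5,
              as_efficient_cost=40.0)

print(f"Overall efficiency:    {d.overall:.3f}")
print(f"Managerial efficiency: {d.managerial:.3f}")
print(f"Scale efficiency:      {d.scale:.3f}")
```

On these figures, the authority might hold the entrant responsible for the managerial component while allowing it time to close the scale component.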

Concluding remarks

Concerns around the availability of data are not unique to efficiency techniques. All quantitative evidence is imperfect and comes with a degree of uncertainty about data quality and the appropriate model. Empirical results are not meant to dictate a decision by an authority, but to provide the authority with additional evidence to help its decision-making. Quantitative efficiency techniques are just like other quantitative techniques: one cannot base decisions on their results alone, but they have their place in the competition policy toolbox, and there is no reason why these should not be applied in unilateral conduct analysis.

1 According to one study, an efficiency defence or other objective justification was put forward in 42% of cases that received a final European Commission decision between 2009 and 2013. Friederiszick, H.W. and Gratz, L. (2013), ‘Dominant and efficient—on the relevance of efficiencies in abuse of dominance cases’, The Role of Efficiency Claims in Antitrust Proceedings, OECD Policy Roundtables, 2 May.

2 Faella, G. (2016), ‘The Efficient Abuse: Reflections on the EU, Italian and UK experience’, Competition Law and Policy Debate, 2:1, March.

3 As in merger investigations, variable and fixed cost savings are likely to be treated somewhat asymmetrically, as it is assumed that only the former can have a direct impact on pricing.

4 Office of Fair Trading (2010), ‘No Grounds for Action Decision: Alleged Abuse of a Dominant Position by Flybe Limited’, Case No. MPINF-PSWA001-04, December. See also Oxera (2011), ‘No-fly zone? A curious case of alleged predation by a new entrant’, Agenda, July. Oxera advised Flybe during this case.

5 For example, see Case T-271/03 Deutsche Telekom AG v Commission (Judgment of 10 April 2008); and Case COMP/38.784 Wanadoo España v Telefónica (Decision of 4 July 2007).

6 Altmark, case C-280/00, Judgment of 24 July 2003.

7 For detailed discussion, see Kumbhakar, S., Wang, H.-J. and Horncastle, A. (2015), A Practitioner’s Guide to Stochastic Frontier Analysis Using Stata, Cambridge University Press.

8 For example, purchasing efficiencies were considered in the Nynas/Shell (European Commission (2013), Comp/M.6360 – Nynas/Shell/Harburg Refinery, section 7.4.4.3.2.) and GE/Alstom (Federico, G. (2016), ‘Efficiencies in merger control: recent EC experience’, CRA Lunchtime talk, 12 October) merger cases. The suite of available quantitative techniques is, however, much richer than the methods applied by authorities might suggest. For efficiencies in mergers, see Jenkins, H. (2013), ‘Efficiency assessments in European competition policy and practical tools’, in OECD (2013), ‘The Role of Efficiency Claims in Antitrust Proceedings’, 2 May.

9 Where industries have a regional structure, or where internal benchmarking of comparable sub-units is feasible. In some instances, regulators have conducted international benchmarking in order to undertake comparative analysis.

10 In SFA, unlike in DEA, it is possible to account for noise in the data and distinguish it from inefficiency estimated from the model.

11 Parthasarathy, S. (2010), ‘A computationally efficient procedure for Data Envelopment Analysis’, PhD thesis, available from London School of Economics.

12 In 2013, the number was 179. See Bundesnetzagentur (2015), ‘Efficiency benchmarking’, 13 July.

13 See Incentive Regulation Ordinance (ARegV), section 12.

14 See Bundesnetzagentur (2008), ‘Beschluss wegen Festlegung der kalenderjährlichen Erlösobergrenzen für die zweite Regulierungsperiode Gas (2013 bis 2017) (Beschlusskammer 9)’.

15 See Oxera (2016), ‘Study on ongoing efficiency for Dutch gas and electricity TSOs’, prepared for Netherlands Authority for Consumers and Markets (ACM), January.
